AI Reading for Monday November 25

AI is eating the world - Benedict Evans

Amazon’s moonshot plan to rival Nvidia in AI chips. - Bloomberg

They have a shot. Multiplying matrices is a layup. Making ML libraries like PyTorch run well is a medium lift with Anthropic's help. Building a leading high-performance networking fabric that links lots of chips is a moderate lift. Also, with that level of integration, there's a good chance they buy Anthropic, which would make them an AI leader.

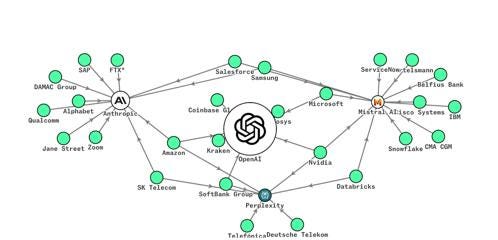

Visualizing the tangled web of relationships between AI companies. - Sherwood News

UK police leadership on AI crime threats, including identity theft scams, cybercrime, CSAM, and sextortion - The Guardian

UK cops spend millions on facial recognition. - The Register

Confusion over whether, and what, Microsoft is scraping from your Word and Excel usage to "train AI models" - The Stack

The biggest danger may not be the bad guys but what we accept from the supposedly good guys. Quis custodiet ipsos custodes? (Who watches the watchmen?)

Study: 76% of Cybersecurity Professionals Believe AI Should Be Heavily Regulated

Gen Z fears AI taking their jobs more than older workers do - Fortune

Building a job-hunting assistant using the Coze no-code agent framework. - Hacker Noon

Jamie Dimon says the AI generation of employees will work 3.5 days a week and live to 100 - Fortune

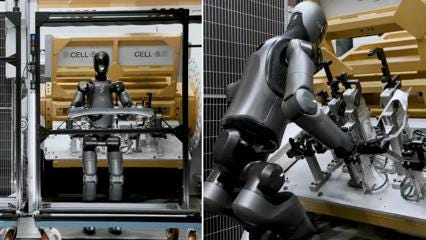

Figure robot is shown speeding up work at a BMW plant - Interesting Engineering

BMW seems to indicate that testing is not currently under way and that it is awaiting next steps.

Luma text-to-video ships iOS app, web app - VentureBeat

Nvidia launched a music / sound model - NVIDIA Blog

AI culture war meets Coke: "It's NOT the real thing" - Yahoo News

Survey says Gen Z is Gen AI - Quartz

Maybe the generation after them will actually be labeled the ‘AI generation’.

Virtual vixens: AI is the future of OnlyFans - Yahoo Tech

Marc Benioff thinks we've reached the 'upper limits' of foundation models and that the future is AI agents - Yahoo Tech

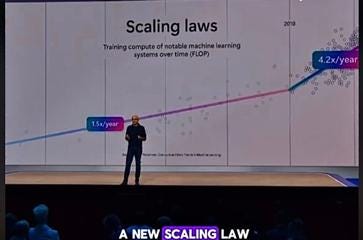

Gary Marcus straw-mans scaling laws - Gary Marcus

Everyone who has ever talked about scaling laws has said they are empirical regularities and that no one knows how far they go.

If you're an engineer working on LLMs, you ask yourself whether it's more efficient to scale up training compute, inference compute, etc., and you express the answer as a 'scaling law', i.e. a power-law relationship that shows up as a straight line on a log-log plot. 'Law' is of course a bad word to use with laymen and morons for what is really an empirical parameter estimate.
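To make the "empirical parameter estimate" point concrete: a scaling law of the form loss = a · compute^b is just a linear regression on log(compute) and log(loss). The slope b and the intercept log(a) are the whole "law". Below is a minimal Python sketch. The data points and the helper name `fit_power_law` are hypothetical and made up for illustration; they are not taken from any real model or paper.

```python
import math

def fit_power_law(xs, ys):
    """Fit y = a * x**b by ordinary least squares on (log x, log y)."""
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    n = len(lx)
    mean_x = sum(lx) / n
    mean_y = sum(ly) / n
    cov = sum((u - mean_x) * (v - mean_y) for u, v in zip(lx, ly))
    var = sum((u - mean_x) ** 2 for u in lx)
    b = cov / var                  # slope in log-log space = power-law exponent
    log_a = mean_y - b * mean_x    # intercept in log-log space
    return math.exp(log_a), b

# Hypothetical data: loss generated from an exact power law, loss = 10 * compute**-0.05
compute = [1e18, 1e19, 1e20, 1e21, 1e22]
loss = [10 * c ** -0.05 for c in compute]

a, b = fit_power_law(compute, loss)
print(f"a={a:.3f}, b={b:.3f}")

# Extrapolating past the observed range is the risky part:
# the fit itself says nothing about whether the trend keeps holding.
print(f"predicted loss at 1e24 FLOPs: {a * 1e24 ** b:.3f}")
```

The fit is only as good as the range of data behind it. Extrapolating to 100x more compute is exactly the "no one knows how far they go" question.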

There's a ridiculous level of hype, but when you take the anti-AI position by arguing against straw men or visible facts, you do a disservice to people trying to make decisions, and it becomes another form of grift.

If we do hit a wall on model size, the upside is that it gives us time to breathe and put in safeguards, limits the unbounded growth in energy going into models, and still leaves 10 years of work exploiting current AI capabilities. Also, how hilarious would it be if Elon Musk’s billions and 200,000 GPUs were for nought?

PlayAI closes $20m to join text-to-speech race with ElevenLabs and others - TechCrunch

OpenAI released 2 papers on red teaming. - OpenAI

I wonder who he could be referring to …

LLM skills emerge in strange, discontinuous ways - Decrypt

Google sponcon: The AI-Powered Future of Drug Discovery - The Atlantic

Intel grant curtailed - NY Times

Defense Llama, a military chatbot built on Meta's Llama, is said to dispense dangerous and irresponsible advice. - The Intercept

$10b data center planned for Wyoming ranch. - NY Times

AI-enabled shopping carts. - CBS

A brain the size of a universe and I'm just a manically depressed robot - Reddit

Follow the latest AI headlines via SkynetAndChill.com on Bluesky